KU Law School Spotlights AI for Lawyers Course and Student Research on AI in Legal Practice

Earlier this summer, visiting scholar Dr. Bakht Munir taught AI for Lawyers, a five-day intensive course at KU Law that gave students a deep dive into applications of artificial intelligence in legal practice. Seven KU Law students took the course, engaging in critical discussions and hands-on evaluations of AI tools used in law.

Course Structure and Daily Activities

Over five days, the course moved from foundational concepts to applied case studies, giving students a well-rounded understanding of AI in legal practice.

Day 1: Historical Evolution of AI

The course opened by defining AI and tracing its historical evolution, from ancient automata to modern generative language models.

The discussion covered key milestones in the evolution of artificial intelligence, beginning with the mechanical pigeon built around 400 BCE by Archytas of Tarentum, an early example of automata. It then moved to Karel Čapek’s introduction of the term "robot" in 1921 and to Japan’s first robot, Gakutensoku, built by Professor Makoto Nishimura. AI gained momentum as a field with Alan Turing’s Imitation Game, proposed in 1950 as a test of machine intelligence, followed by the 1956 Dartmouth conference organized by John McCarthy, where the term "artificial intelligence" was formally adopted. Later milestones included IBM’s Deep Blue defeating world chess champion Garry Kasparov in 1997, a landmark demonstration of machine capability. The founding of OpenAI in 2015 further accelerated AI innovation, contributing to the rise of generative AI and large language models, which now play a transformative role across industries.

This historical survey gave students a foundation for understanding how earlier technologies inspired the creation of artificial intelligence.

Day 2: AI Tools and Hallucinations

On the second day, each student demonstrated a different AI tool relevant to legal practice, presenting live on the tool’s capabilities, advantages and limitations. This hands-on approach allowed students to critically assess how AI can assist legal professionals while identifying potential weaknesses in its application.

Following the demonstrations, an in-depth discussion unfolded on the probabilistic nature of AI systems, particularly the challenges posed by their black-box mechanisms, in which internal decision-making processes remain largely opaque. Students examined ethical concerns such as data privacy, algorithmic bias and AI hallucinations, in which AI generates fabricated or inaccurate legal content. The discussion reinforced the importance of verification and safeguards in legal research so that AI remains a supplementary tool rather than a substitute for human legal expertise.

Day 3: AI Ethics and Misuse Cases

The third day centered on ethical considerations, with a strong emphasis on bias and AI hallucinations. Discussions explored various types of bias in AI models, the underlying reasons for hallucinations and the different forms these inaccuracies take.

Students presented case summaries, including Mata v. Avianca, Inc., People v. Crabill, United States v. Michael Cohen, United States v. Pras Michel and Iovino v. Michael Stapleton Associates, and critically analyzed instances in which AI-generated hallucinations, such as fictitious citations, affected legal proceedings.

To provide a global perspective, the discussion expanded to AI misuse cases from Australia, Canada, the UK and Pakistan, comparing how different jurisdictions respond to AI-related legal challenges. By examining international regulatory approaches, students gained insight into the broader implications of AI use in legal practice and the varying degrees of accountability imposed worldwide.

Day 4: Standing Orders on the Use of AI in Legal Practice

On the fourth day, students critically analyzed over thirty courts’ standing orders, task force recommendations, policy guidelines, ethical regulations and judicial ethics frameworks across the United States, Canada, the UK, Australia and Pakistan. This examination highlighted growing regulatory efforts to govern AI-assisted legal research and writing, emphasizing the need for heightened accuracy and accountability in AI-generated legal documents.

In the United States, courts have taken varied approaches to AI regulation. While some jurisdictions prohibit AI-generated content in legal filings, others require attorneys to verify all citations and disclose AI use. These measures reinforce the ethical obligations of legal professionals and guard against AI-generated hallucinations and inaccuracies that could compromise legal integrity.

Canada, the UK, Australia and Pakistan, by contrast, have introduced judicial guidance and ethical policies that focus on responsible AI adoption rather than outright bans. Courts in these jurisdictions generally mandate transparency, requiring attorneys to disclose AI use, verify cited legal authorities and ensure accuracy in AI-assisted legal drafting, closely mirroring the disclosure and verification requirements adopted by some U.S. courts.

The discussion underscored a global trend toward balancing AI innovation with robust regulatory safeguards, positioning AI as a complement to, rather than an unregulated substitute for, human legal expertise.

Day 5: Final Project - Evaluating AI Systems for Legal Accuracy

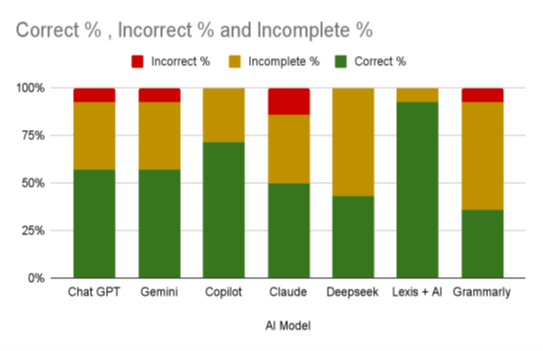

The culminating project assessed the accuracy of AI tools in legal reasoning. Each student tested one tool (ChatGPT, Google Gemini, Microsoft Copilot, Claude, DeepSeek, Lexis+ AI or Grammarly) by posing legal questions and comparing the AI-generated responses to expert human answers.

Students rated each response as correct, incomplete or incorrect based on legal precision, clarity and doctrinal correctness. The final project results are summarized below.

Lexis+ AI outperformed the other tools, grounding its responses in statutory law and case law references, while Grammarly, though useful for grammar and syntax, lacked substantive legal citations. Students also analyzed accuracy question by question, noting a strong correlation between AI errors and ambiguous phrasing. For instance, most models answered the question on fault elements in Kansas incorrectly, suggesting that AI tools struggle to distinguish nuanced, jurisdiction-specific legal definitions.

Conclusion and Future Directions

This course exemplifies KU Law School’s commitment to preparing future lawyers for the evolving landscape of AI in legal practice. Through critical evaluation of and practical engagement with AI tools, students gained insight into both the potential and the limitations of AI in law. The project’s findings underscore the importance of verifying AI output and reinforce the role of human legal reasoning in maintaining professional integrity. KU Law continues to lead in AI legal education, fostering discussions that shape the future of AI regulation and ethical legal practice.